Coordinate vector

In linear algebra, a coordinate vector is an explicit representation of a vector in an abstract vector space as an ordered list of numbers or, equivalently, as an element of the coordinate space $F^n$. Coordinate vectors allow calculations with abstract objects to be transformed into calculations with blocks of numbers (matrices, column vectors and row vectors).

The idea of a coordinate vector can also be used for infinite-dimensional vector spaces, as addressed below.


Definition

Let $V$ be a vector space of dimension $n$ over a field $F$ and let

$$B = \{b_1, b_2, \ldots, b_n\}$$

be an ordered basis for $V$. Then for every $v \in V$ there is a unique linear combination of the basis vectors that equals $v$:

$$v = \alpha_1 b_1 + \alpha_2 b_2 + \cdots + \alpha_n b_n.$$

The linear independence of the basis vectors ensures that the coefficients $\alpha_1, \ldots, \alpha_n$ are determined uniquely by $v$ and $B$. We now define the coordinate vector of $v$ relative to $B$ to be the following sequence of coordinates:

$$[v]_B = (\alpha_1, \alpha_2, \cdots, \alpha_n).$$

This is also called the representation of $v$ with respect to $B$, or the $B$ representation of $v$. The $\alpha_i$ are called the coordinates of $v$. The order of the basis matters here, since it determines the order in which the coefficients are listed in the coordinate vector.
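As a small worked illustration (the space $V = \mathbb{R}^2$, the basis, and the vector are chosen here for illustration and do not come from the text), take the ordered basis $B = ((1,1),(1,-1))$ and $v = (3,1)$. Solving the defining linear combination gives the coordinates, and listing the same two basis vectors in the opposite order, as $B'$, permutes them:

$$
\begin{aligned}
\alpha_1 (1,1) + \alpha_2 (1,-1) = (3,1) &\iff \alpha_1 + \alpha_2 = 3,\ \ \alpha_1 - \alpha_2 = 1 \iff [v]_B = (2, 1),\\
B' = ((1,-1),(1,1)) &\implies [v]_{B'} = (1, 2), \quad\text{since } 1\,(1,-1) + 2\,(1,1) = (3,1).
\end{aligned}
$$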

Coordinate vectors of finite-dimensional vector spaces can be represented by matrices as column or row vectors. Which form is used depends on whether the author intends to perform linear transformations by matrix multiplication on the left of the vector (pre-multiplication) or on the right (post-multiplication). A column vector of length $n$ can be pre-multiplied by any matrix with $n$ columns, while a row vector of length $n$ can be post-multiplied by any matrix with $n$ rows.

For instance, a transformation from basis $B$ to basis $C$ may be obtained by pre-multiplying the column vector $[v]_B$ by a square matrix $[M]_{C}^{B}$ (see below), resulting in a column vector $[v]_C$:

$$[v]_C = [M]_{C}^{B} [v]_B.$$

If $[v]_B$ is a row vector instead of a column vector, the same basis transformation can be obtained by post-multiplying the row vector by the transposed matrix $([M]_{C}^{B})^\mathrm{T}$ to obtain the row vector $[v]_C$:

$$[v]_C = [v]_B \, ([M]_{C}^{B})^\mathrm{T}.$$
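The following Python sketch illustrates that the two conventions produce the same entries. The matrix `M_CB` is an arbitrary invertible example chosen for illustration (it is not derived from any particular pair of bases), and the helper names `premultiply`, `postmultiply`, and `transpose` are hypothetical.

```python
# Column vs. row conventions for the same change of basis.
# Hypothetical helpers; M_CB is an arbitrary invertible 2x2 example,
# not derived from any particular pair of bases.


def premultiply(M, x):
    """Column convention: (M x)_i = sum_j M[i][j] * x[j]."""
    return [sum(M[i][j] * x[j] for j in range(len(x))) for i in range(len(M))]


def postmultiply(x, M):
    """Row convention: (x M)_j = sum_i x[i] * M[i][j]."""
    return [sum(x[i] * M[i][j] for i in range(len(x))) for j in range(len(M[0]))]


def transpose(M):
    """Swap rows and columns."""
    return [list(row) for row in zip(*M)]


M_CB = [[2, 1],
        [0, 3]]
v_B = [4, -1]

as_column = premultiply(M_CB, v_B)            # [v]_C = [M]_C^B [v]_B
as_row = postmultiply(v_B, transpose(M_CB))   # [v]_C = [v]_B ([M]_C^B)^T
print(as_column, as_row)  # [7, -3] [7, -3]
```

The transpose is what lets the row form reuse the same matrix: entry $j$ of $x\,M^\mathrm{T}$ is $\sum_i x_i M_{ji}$, which is exactly entry $j$ of $M x$.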

The standard representation

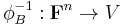

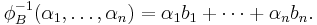

We can mechanize the above transformation by defining a function $\phi_B : V \to F^n$, called the standard representation of $V$ with respect to $B$, that takes every vector to its coordinate representation: $\phi_B(v) = [v]_B$. Then $\phi_B$ is a linear transformation from $V$ to $F^n$. In fact, it is an isomorphism, and its inverse $\phi_B^{-1} : F^n \to V$ is simply

$$\phi_B^{-1}(\alpha_1, \ldots, \alpha_n) = \alpha_1 b_1 + \cdots + \alpha_n b_n.$$

Alternatively, we could have defined $\phi_B^{-1}$ to be the above function from the beginning, realized that $\phi_B^{-1}$ is an isomorphism, and defined $\phi_B$ to be its inverse.
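A minimal Python sketch of $\phi_B$ and $\phi_B^{-1}$, assuming $V = \mathbb{Q}^n$ with vectors stored as tuples of standard coordinates and exact rational arithmetic via `fractions.Fraction`. The function names `phi_B` and `phi_B_inverse` are illustrative; $\phi_B$ is computed here by Gauss-Jordan elimination on the system $\alpha_1 b_1 + \cdots + \alpha_n b_n = v$, which is one possible method rather than the only one.

```python
from fractions import Fraction

# Assumption: V = Q^n, vectors are tuples of standard coordinates.
# Hypothetical function names, for illustration only.


def phi_B_inverse(coords, basis):
    """phi_B^{-1}: (a_1, ..., a_n) -> a_1 b_1 + ... + a_n b_n."""
    dim = len(basis[0])
    return tuple(sum(a * b[k] for a, b in zip(coords, basis)) for k in range(dim))


def phi_B(v, basis):
    """phi_B: v -> [v]_B, by Gauss-Jordan elimination with exact fractions."""
    n = len(basis)
    if len(v) != n:
        raise ValueError("expected a vector of length n")
    # Augmented matrix: column j holds b_j, the last column holds v.
    rows = [[Fraction(basis[j][i]) for j in range(n)] + [Fraction(v[i])] for i in range(n)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if rows[r][col] != 0), None)
        if pivot is None:
            raise ValueError("the given vectors do not form a basis")
        rows[col], rows[pivot] = rows[pivot], rows[col]
        p = rows[col][col]
        rows[col] = [x / p for x in rows[col]]          # make the pivot 1
        for r in range(n):                              # clear the rest of the column
            if r != col and rows[r][col] != 0:
                f = rows[r][col]
                rows[r] = [x - f * y for x, y in zip(rows[r], rows[col])]
    return tuple(row[n] for row in rows)


B = ((1, 1), (1, -1))   # an illustrative ordered basis of Q^2
coords = phi_B((3, 1), B)
print([str(a) for a in coords])                       # ['2', '1']
print([str(a) for a in phi_B_inverse(coords, B)])     # ['3', '1']
```

The round trip $\phi_B^{-1}(\phi_B(v)) = v$ is the isomorphism property in action. For an abstract $V$, such as a space of polynomials, one would first need a concrete way of storing vectors; the sketch sidesteps that by working in $\mathbb{Q}^n$ directly.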

Examples

Example 1

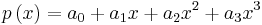

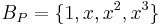

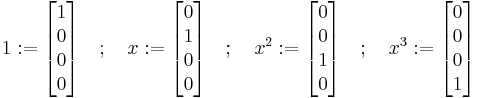

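Let $P_4$ be the space of all algebraic polynomials of degree less than 4 (i.e. the highest exponent of $x$ can be 3). This is a vector space spanned by the following linearly independent polynomials:

$$\{1, x, x^2, x^3\},$$

which are matched to coordinate vectors as follows:

$$1 := \begin{bmatrix} 1 \\ 0 \\ 0 \\ 0 \end{bmatrix}, \quad x := \begin{bmatrix} 0 \\ 1 \\ 0 \\ 0 \end{bmatrix}, \quad x^2 := \begin{bmatrix} 0 \\ 0 \\ 1 \\ 0 \end{bmatrix}, \quad x^3 := \begin{bmatrix} 0 \\ 0 \\ 0 \\ 1 \end{bmatrix}.$$

Then the coordinate vector corresponding to the polynomial

$$p(x) = a_0 + a_1 x + a_2 x^2 + a_3 x^3$$

is $\begin{bmatrix} a_0 & a_1 & a_2 & a_3 \end{bmatrix}^\mathrm{T}$.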

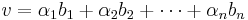

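According to this representation, the differentiation operator $d/dx$, which we shall denote $D$, is represented by the following matrix:

$$Dp(x) = p'(x); \qquad [D] = \begin{bmatrix} 0 & 1 & 0 & 0 \\ 0 & 0 & 2 & 0 \\ 0 & 0 & 0 & 3 \\ 0 & 0 & 0 & 0 \end{bmatrix}$$

Using this method it is easy to explore the properties of the operator, such as invertibility, whether it is Hermitian, anti-Hermitian, or neither, its spectrum and eigenvalues, and more.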
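A short Python sketch of the matrix $[D]$ acting on coordinate vectors with respect to the ordered basis $(1, x, x^2, x^3)$; the sample polynomial is arbitrary and the helper names `apply` and `matmul` are hypothetical. It also illustrates one of the properties mentioned above: $[D]^4$ is the zero matrix, so $D$ is nilpotent, hence not invertible, and its only eigenvalue is 0.

```python
# Matrix of D = d/dx on P4 with respect to the ordered basis (1, x, x^2, x^3),
# acting on column coordinate vectors (a0, a1, a2, a3).
D = [
    [0, 1, 0, 0],
    [0, 0, 2, 0],
    [0, 0, 0, 3],
    [0, 0, 0, 0],
]


def apply(M, x):
    """Pre-multiply the column vector x by the matrix M (hypothetical helper)."""
    return [sum(m * xj for m, xj in zip(row, x)) for row in M]


def matmul(A, B):
    """Ordinary matrix product A B (hypothetical helper)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


# Sample polynomial p(x) = 5 + 3x - x^2 + 2x^3, chosen for illustration.
p = [5, 3, -1, 2]
dp = apply(D, p)
print(dp)  # [3, -2, 6, 0], i.e. p'(x) = 3 - 2x + 6x^2

# Raise [D] to the fourth power: the result is the zero matrix.
D4 = D
for _ in range(3):
    D4 = matmul(D4, D)
print(D4)  # [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
```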

Example 2

The Pauli matrices represent the spin operator when the spin eigenstates are transformed into vector coordinates.
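For concreteness, assuming the usual convention that the two eigenstates of $S_z$ are taken as the ordered basis $(|{\uparrow}\rangle, |{\downarrow}\rangle)$ (this notation is introduced here, not in the text above), the coordinate vectors and the Pauli matrices are

$$
|{\uparrow}\rangle \mapsto \begin{pmatrix} 1 \\ 0 \end{pmatrix}, \quad
|{\downarrow}\rangle \mapsto \begin{pmatrix} 0 \\ 1 \end{pmatrix}, \qquad
\sigma_x = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}, \quad
\sigma_y = \begin{pmatrix} 0 & -i \\ i & 0 \end{pmatrix}, \quad
\sigma_z = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix},
$$

with spin components $S_k = \tfrac{\hbar}{2} \sigma_k$. For instance, $\sigma_z (1, 0)^\mathrm{T} = (1, 0)^\mathrm{T}$, while $\sigma_x (1, 0)^\mathrm{T} = (0, 1)^\mathrm{T}$: in these coordinates $\sigma_x$ swaps the two basis states.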

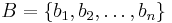

Basis transformation matrix

Let $B$ and $C$ be two different bases of a vector space $V$, and let $[M]_{C}^{B}$ denote the matrix whose columns are the $C$ representations of the basis vectors $b_1, b_2, \ldots, b_n$:

$$[M]_{C}^{B} = \begin{bmatrix} [b_1]_C & \cdots & [b_n]_C \end{bmatrix}$$

This matrix is referred to as the basis transformation matrix from $B$ to $C$, and can be used for transforming any vector $v$ from a $B$ representation to a $C$ representation, according to the following theorem:

$$[v]_C = [M]_{C}^{B} [v]_B.$$

If $V = F^n$ and $E$ is its standard basis, the transformation from $B$ to $E$ can be represented with the following simplified notation:

$$v = [M]^B [v]_B,$$

where

$$v = [v]_E \quad \text{and} \quad [M]^B = [M]_{E}^{B}.$$
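A minimal Python sketch of the theorem in $\mathbb{Q}^2$, with two bases chosen purely for illustration. The helper `coords_2d` (a hypothetical name) finds $[v]_C$ by Cramer's rule, which only covers the $2 \times 2$ case; `change_of_basis` (also hypothetical) assembles $[M]_C^B$ column by column, exactly as in the definition of the basis transformation matrix.

```python
from fractions import Fraction

# Assumption: V = Q^2; hypothetical helper names, illustrative bases.


def coords_2d(v, basis):
    """[v]_C for an ordered basis C = (c1, c2) of Q^2, via Cramer's rule."""
    (a, c), (b, d) = basis            # c1 = (a, c), c2 = (b, d)
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("the given vectors do not form a basis")
    x, y = v
    return ((x * d - b * y) / det, (a * y - c * x) / det)


def change_of_basis(B, C):
    """[M]_C^B: the matrix whose i-th column is [b_i]_C."""
    cols = [coords_2d(b_i, C) for b_i in B]
    n = len(B)
    return [[cols[i][r] for i in range(n)] for r in range(n)]


def apply(M, x):
    """Pre-multiply the column vector x by M."""
    return [sum(m * xj for m, xj in zip(row, x)) for row in M]


B = ((1, 1), (1, -1))
C = ((1, 0), (1, 1))
M_CB = change_of_basis(B, C)

v_B = (1, 2)                                   # v = 1*b1 + 2*b2
v = tuple(sum(a * b_i[k] for a, b_i in zip(v_B, B)) for k in range(2))
v_C = apply(M_CB, v_B)                         # the theorem: [v]_C = [M]_C^B [v]_B
assert v_C == list(coords_2d(v, C))            # agrees with solving for [v]_C directly
print([str(c) for c in v_C])                   # ['4', '-1']
```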

Corollary

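The matrix $[M]_{C}^{B}$ is invertible, and its inverse is the basis transformation matrix from $C$ to $B$. In other words,

$$[M]_{C}^{B} [M]_{B}^{C} = [M]_{C}^{C} = \mathrm{Id}$$

$$[M]_{B}^{C} [M]_{C}^{B} = [M]_{B}^{B} = \mathrm{Id}$$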
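A quick check of the corollary, using two bases of $\mathbb{Q}^2$ chosen here purely for illustration, $B = ((1,1),(1,-1))$ and $C = ((1,0),(1,1))$. Since $b_1 = 0\,c_1 + 1\,c_2$, $b_2 = 2\,c_1 - c_2$, $c_1 = \tfrac12 b_1 + \tfrac12 b_2$ and $c_2 = b_1$, the two basis transformation matrices and their products are

$$
[M]_{C}^{B} = \begin{bmatrix} 0 & 2 \\ 1 & -1 \end{bmatrix}, \qquad
[M]_{B}^{C} = \begin{bmatrix} \tfrac12 & 1 \\ \tfrac12 & 0 \end{bmatrix}, \qquad
[M]_{C}^{B} [M]_{B}^{C} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} = [M]_{B}^{C} [M]_{C}^{B}.
$$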

Remarks

- The basis transformation matrix can be regarded as an automorphism of V.

- To remember the theorem $[v]_C = [M]_{C}^{B} [v]_B$ easily, notice that the superscript of $M$ and the subscript of $v$ "cancel" each other, and the subscript of $M$ becomes the new subscript of $v$. This "canceling" of indices is not a genuine mathematical operation but a convenient and intuitively appealing manipulation of symbols, made possible by an appropriately chosen notation.

Infinite dimensional vector spaces

Suppose $V$ is an infinite-dimensional vector space over a field $F$. If the dimension is $\kappa$, then there is some basis of $\kappa$ elements for $V$. After an order is chosen, the basis can be considered an ordered basis. The elements of $V$ are finite linear combinations of elements in the basis, which give rise to unique coordinate representations exactly as described before. The only change is that the indexing set for the coordinates is not finite. Since a given vector $v$ is a finite linear combination of basis elements, the only nonzero entries of the coordinate vector for $v$ will be the nonzero coefficients of the linear combination representing $v$. Thus the coordinate vector for $v$ is zero except in finitely many entries.
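One way to store such coordinate vectors in a program, sketched here in Python as an assumption rather than a standard representation, is a dictionary holding only the finitely many nonzero entries. The example takes $V = F[x]$, the space of all polynomials, with the ordered basis $(1, x, x^2, \ldots)$; integer coefficients are used only to keep it short, and the helper names are hypothetical.

```python
# Assumption: a finitely supported coordinate vector is a dict
# {basis index: nonzero coefficient}; absent indices are zero coordinates,
# so the infinite index set is never materialized.
# Illustration: V = F[x] with ordered basis (1, x, x^2, ...), index k <-> x^k.


def coordinate(v, k):
    """The k-th coordinate of v: zero outside the finite support."""
    return v.get(k, 0)


def add(u, v):
    """Coordinatewise sum; the support stays finite."""
    out = dict(u)
    for k, c in v.items():
        out[k] = out.get(k, 0) + c
    return {k: c for k, c in out.items() if c != 0}


def scale(a, v):
    """Scalar multiple a * v."""
    return {k: a * c for k, c in v.items() if a * c != 0}


p = {0: 1, 5: 2}          # 1 + 2x^5
q = {5: 3, 100: -1}       # 3x^5 - x^100
r = add(scale(3, p), scale(-2, q))
print(r)                                        # {0: 3, 100: 2}, i.e. 3 + 2x^100
print(coordinate(r, 5), coordinate(r, 10**9))   # 0 0
```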

The linear transformations between (possibly) infinite-dimensional vector spaces can be modeled, analogously to the finite-dimensional case, with infinite matrices. The special case of the transformations from V into V is described in the article on the full linear ring.
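As an illustration not taken from the text above, differentiation on the space of all polynomials, with the ordered basis $(1, x, x^2, \ldots)$, has an infinite matrix whose entry in row $i$, column $j$ is $j$ if $i = j - 1$ and 0 otherwise. Column $j$ of such a matrix is the coordinate vector of the image of the $j$-th basis vector, so it has only finitely many nonzero entries, and applying the matrix to a finitely supported vector is a finite computation. The Python sketch below uses the hypothetical helper names `D_entry` and `apply_D`, and stores vectors as dictionaries of nonzero coordinates.

```python
# Illustration (assumed representation): vectors in F[x] are dicts
# {exponent: nonzero coefficient} w.r.t. the basis (1, x, x^2, ...).
# Since d/dx x^j = j x^(j-1), column j of the infinite matrix has a
# single possibly nonzero entry, j, in row j - 1.


def D_entry(i, j):
    """Entry (i, j) of the infinite matrix of d/dx (hypothetical helper)."""
    return j if i == j - 1 else 0


def apply_D(v):
    """Coordinates of Dp from the coordinates of p, using only finitely many entries."""
    out = {}
    for j, c in v.items():
        i = j - 1                   # the only row where column j can be nonzero
        if i >= 0:
            val = D_entry(i, j) * c
            if val != 0:
                out[i] = out.get(i, 0) + val
    return out


p = {0: 7, 3: 2, 10: 1}   # 7 + 2x^3 + x^10
print(apply_D(p))         # {2: 6, 9: 10}, i.e. 6x^2 + 10x^9
```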
